Building SANDS at KAUST

We live in a connected world where networked systems play an increasingly important role. These systems, which are the foundational pillars of our modern digital lives, are the result of some remarkable technological advancements and progress in computer science over the past three decades.

Yet despite all the advances in computer science and research in computer-based systems, we still have an immature understanding of how to design and operate such systems based on first principles that make them dependable, energy-efficient, easy to manage and future-proof. Meanwhile, systems are increasing in both size and complexity and are bound to scale up to accommodate ever-larger numbers of components and users. We are still far from mastering a science for building systems, and we are now experiencing radical technological trends and paradigm shifts that require us to revisit our previous decisions and designs.

A new alternative to Moore's Law, which has sustained decades of scaling, is needed. A growing body of data needs to be analyzed, and new services are required to connect more people and devices.

Improving how we compute

Marco Canini is a computer scientist and an assistant professor in the KAUST Computer, Electrical and Mathematical Science and Engineering Division, and his research goal is to solve some of the aforementioned problems and attempt to decode these interconnected networked systems.

Canini's work is focused on improving networked-system design, implementation and operation along vital properties like reliability, performance, security and energy efficiency. A particular thrust of his research centers around the principled construction and operation of large-scale networked computer systems, and in particular the development of Software-Defined Advanced Networked and Distributed Systems (SANDS).

"I work on distributed systems and networking and specifically at the intersection of these two sub-communities—it's something I like to call networked systems," Canini said.

"Not everyone is conscious of how modern lives in the digital era operate because we are all so used to convenient online services—services like search, file sharing and other interactive applications that are interconnected and that regulate many aspects of our lives. These services are possible due to technological developments in terms of what I define as systems infrastructures: compute, storage and networking. People may not really perceive how critical and how important this systems infrastructure is, but it really is the pillars of what supports all of our digital lives," he said.

The shortest path to becoming a computer scientist

Outside of his academic career, Canini has worked for Google, Intel and Deutsche Telekom. This exposure to industry and academic centers of excellence served to fuel Canini's passion for becoming the best possible computer scientist.

"Many environments have shaped my own taste for research and problems. I have been very fortunate in my path because I had the privilege of being exposed both to academia, centers of excellence and industry. Through this exposure, I could always see combining the idea of technology into practical products," Canini said.

"The exposure to these companies and institutions has really helped with the context and the excellent people I met. Thankfully, because of the successes I have had, I have had exposure to many other subfields of computer science, such as security, formal methods, machine learning, optimization theory, software engineering and programming languages that really happened because of this path that I took," he added.

Building systems worthy of society's trust

Assistant Professor Marco Canini works on distributed systems and networking and specifically at the intersection of these two sub-communities. Photo courtesy of Shutterstock.

Developing something that offers not only theoretical insight but also real-world impact, and that is highly rewarding and satisfying, is a major drive for Canini in his research goals and career. Taking this drive as far as it can go is also challenging, as it requires many factors to coalesce at the same time.

"I have had this desire to expand and evolve my research with computer systems, because not only are they highly complex systems, but they are also exceedingly important. If you look at what the Internet has achieved in just over 20 years—it's so remarkable. The internet is probably one of the most complex—if not the most complex system—that humanity has created. It is really a remarkable success story. It shows just how tremendous an impact and one object can create, and that for me is very interesting to study," he said.

"We must strive to ensure our research is aligned with what the world needs and whether you have the right solution at the right time. I am excited for the ultimate goal of my work, which is to distill fundamental principles towards enriching our knowledge on how to build scalable, dependable and future-proof systems worthy of society's trust," Canini noted.

Remarkable progress in computer science

Even though we have had remarkable progress in computer science and in systems in particular, we still don't have a constructive theory of how you can design and build a system that is going to work the way that it is meant to—a system that will achieve whatever you set out to achieve.

"In a way, computer science is the opposite of civil engineering—you set out to design a bridge, you know what it is going to do and whether it is going to stand and you have stability. In computer science, we don't have this stability, and there is evidence that we are far away from having any constructive theory," Canini said.

"The vision for the work we are doing at KAUST is to make it easy to produce and manage key networked systems that society trusts and that can achieve specific objectives. Our team strives for impact. In some cases, we are concerned with high performance and scalability, but it's not just about making things fast; they must also be dependable and future proof. We also want to ensure predictability of the performance. We want to create higher reliability and lower the complexity of managing large-scale systems, because the bigger the system, the harder it is to control it. When you reduce the complexity or remove an obstruction, this is a very powerful thing—we strive to learn the general principles and lessons of what really works in practice," he noted.

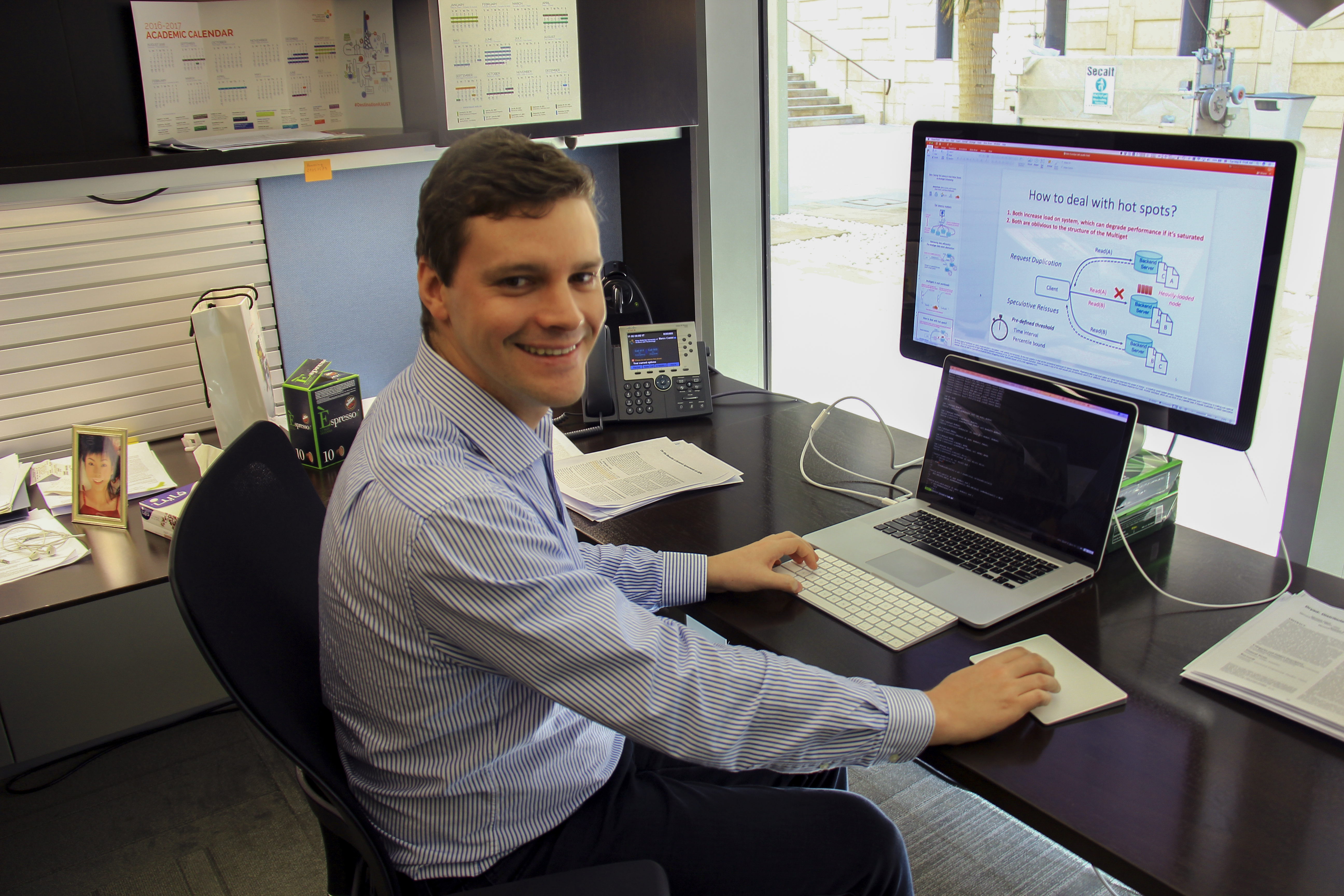

Assistant Professor Marco Canini’s work is focused on improving networked-system design, implementation and operation. Photo by Meres J. Weche.

Complexity—the hardest challenge

Systems research typically deals with many challenges, but the number one challenge is complexity. Most of Canini's time is devoted to understanding and unraveling the many complexities and nuances that computer systems entail.

"Complexity is the hardest challenge that we face because these computer systems are very complex and they are very hard to scale to extremely large numbers of components and users. We really don't understand all the different interconnections, so it is similar in a sense to a biological system, because we can get a grasp of the individual components but don't quite know how it all works once pieced together," Canini acknowledged.

The 'three Ds' of SDN

For the past several years, Canini's research has focused on developing foundations for software-defined networking (SDN). This is achieved through advancements in what he calls the "three Ds" of SDN: development, debugging and deployment. Because networks have become programmable systems, researchers have to identify the right programming abstractions so that important and useful network applications can be developed efficiently and with minimal effort.

"Recently there has been a new shift of paradigms for networking, which is something we call SDN. With SDN, we are allowed to design networks that are tailored to application needs. The way that we do this is by making the network programmable, meaning that we can program the network, we can adapt it to be more flexible and we can evolve it with new and changing application requirements. In a sense, it means we can now make networks that are more reliable, flexible and secure," Canini explained.

"There is a bit more to our research, however," he continued. "It is just not only SDN—we also work intensely on data centers and cloud computing problems, where our goal is towards enabling predictable performance when running in this kind of environment, because many applications in the data centers are actually distributed applications and are made from a collection of resources and face many sources of performance fluctuations. They demand predictable performance."

"It is also critical to having consistently low latency in practice and not just the occasional average case, but the latency for the say 99 percent of the cases to be low. It is very important from an economic perspective and a usability perspective," he noted.

"Take for example Amazon: they showed that if they were to increase the latency of how they serve the traffic by 100 milliseconds, it would cost them one percent in sales. It's very important for companies and businesses to have low latency," he added.

Addressing the growing computing and data demand

Any approach that Canini and his colleagues might take is made more difficult by two ongoing trends in computer science. The first trend is that the demand for computing has been exploding over the past few years, and this growing demand has accelerated the need to build massive data centers globally to cope with it. Coupled with this demand is the need to support new applications.

"These new applications are getting more interactive, and they are getting more data that they need to process, so there's more stringent latency requirements to be able to support them. New applications come with different requirements, and the network itself is something that up to this point was not designed with 'evolvability' in mind. It was not designed to be easily extendable," Canini said.

"This is a hard trend to cope with, because also the underlying efficiency of the hardware is in a sense diminishing, especially with respect to how our ability to make things run faster on a single CPU thread. As a result of the ineffective hardware, we need to involve multiple cores and higher parallelisms—all things that make the process harder for people," he added.

"The second trend is data, and namely the amount of data we generate and consume. This volume of consumption is only getting bigger, and the need to process this data is ever-growing. It has been said that we have already entered the so-called 'zetabyte era.' There are many reasons why we have entered this era, including the explosion of mobile internet and social media: broadband—which has increased in speeds and the ability to carry mobile devices that generate thousands of pictures and videos; and the new generation of video and streaming and media distributions. This level of data consumption is expected to continue, and also the internet of things (IoT) is developing, which will connect literally billions of sensors and devices."

"People are really struggling with all this data, and it's not good to collect data for the sake of it. What I derived from these observations is that the systems and decisions we have today were made in the last decade. The older systems can hardly cope with today's scale and volume and velocity, let alone be tasked with this volume in the future. At KAUST, our team wants to design and reach new techniques. We want to create solutions that can improve performance by 10 times or 100 times in the way we solve particularly tough problems," he said.

Dealing with network errors

When dealing with such complicated systems and networks, errors are sure to occur, and they can cause major outages and failures in communication, execution and other processes. Due to the increased interconnectivity of modern society, people tend to notice when these network faults happen. Such faults can create massive knock-on effects for companies, affecting the users who consume their services. Canini drew attention to some of the more high-profile networking faults of the recent past.

"There have been major outages episodes in the past; for example, with Amazon Web Services (which is the most popular cloud provider) when it incorrectly executed a network change. During a planned network capacity upgrade, there was a major outage that broke down a lot of services within Amazon. I believe the outage could have been traced back to just one individual—to a singular human error in the command chain," Canini said.

"Despite everything, people can still manually cause errors or misconfigurations, and that again is another source of big problems. People can have a hard time trying to really figure out how these complex systems can all interact. A few years back, network connectivity issues also disrupted GitHub (a very popular service among developers) and United Airlines, entirely disrupting their airport processing and ticket reservation systems. Most recently, Delta Airlines had to ground all of their flights due to some computer system problem for several hours," he added.

Marco Canini is a computer scientist and an assistant professor in the KAUST Computer, Electrical and Mathematical Science and Engineering Division. Photo by Meres J. Weche.

New solutions for traditional problems

However advanced they may be, SDNs can also suffer from traditional problems, such as distributed denial-of-service (DDoS) attacks. In a DDoS attack, the attacker aims to exhaust resources, whether network bandwidth, compute or storage. These attacks are becoming ever more powerful, due in part to insecure devices deployed as part of the IoT that attackers can harness to create many hundreds of gigabits per second of attack traffic.

"The way that software-defined networking can help in better securing networks is that it gives the programmability of the network. We have these points of interconnection on the internet called internet eXchange Points (IXPs) that can deploy software-defined networks. In this example, suppose that we have a victim of some attack or some traffic and we want to prevent this attack-traffic to reach the victim. In a traditional network, as a victim I could basically do this by saying I don't want to be reachable by the autonomous system from where the attack traffic enters my network. However, what I would do is that I would effectively cut it out. There would be some legitimate traffic and some attack traffic, and I would stop everything—which is not what I want," Canini stated.

"With the software-defined networking—because I can program the network rules myself—I could actually have the victim detect specifically which traffic is the attack one and inform a software-defined IXP to stop and block specifically only that traffic, thus preventing that attack to go and propagate further."

In terms of further real-world applications for his research, Canini highlighted a recent project he is working on at KAUST.

"One particular project I can highlight is one that we have done for cloud data stores. This project started before KAUST, but we are continuing it here. The project is about reducing the latency in cloud data stores. Not every server is as fast as the other one and this changes overtime, but we exploit the fact that there is server redundancy and multiple copies of each data element. To address this, we built an adaptive replica selection technique and implemented it in a system called Apache Cassandra, an open source storage system used by many companies. We demonstrated that we can reduce the latency at the tail of the latency distribution, that is, the 99.9 percentile by a factor of three, which is a significant factor," Canini said.

"This was an important study and we collaborated with two companies—Spotify and Soundcloud—where a prototype of this load balancing technology was actually tested on their test beds. Load balancing refers to choosing the server so that you are doing a good job to balance the load. Spotify today has something they call the expected latency selector, which has been influenced by the approach that we followed. This shows in a sense the impact that we have had and can have with this technology," he noted.

- By David Murphy, KAUST News